FRIB SOLARIS Collaboration: Difference between revisions

| (94 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{| align="right" | |||

| [[File:Back view of SOLARIS DAQ.png|thumb]] | |||

| [[File:Front view of SOLARIS DAQ.png|thumb]] | |||

|} | |||

The collaboration focuses on the development of the DAQ for the SOLARIS spectrometer. | The collaboration focuses on the development of the DAQ for the SOLARIS spectrometer. | ||

| Line 5: | Line 10: | ||

A web page is created for a simulation. [https://fsunuc.physics.fsu.edu/SOLARIS/ Here] | A web page is created for a simulation. [https://fsunuc.physics.fsu.edu/SOLARIS/ Here] | ||

= Hardware = | = Hardware = | ||

| Line 42: | Line 15: | ||

! Item !! Config !! size | ! Item !! Config !! size | ||

|- | |- | ||

| rowspan = " | | rowspan = "6" | Rack server || Dell PowerEdge R7525 || rowspan = "6" | 2U | ||

|- | |- | ||

| AMD EPYC 7302 3.0 GHz 16C/32T x 2 = 64 cores | | AMD EPYC 7302 3.0 GHz 16C/32T x 2 = 64 cores | ||

| Line 51: | Line 24: | ||

|- | |- | ||

| 10Gb/s ethernet dual ports | | 10Gb/s ethernet dual ports | ||

|- | |||

| 2 x 800 W power supply | |||

|- | |- | ||

| Mass storage || 2 X (16 TB HDD x 6 (Raid 10) = 48 TB + 48 TB fail-safe) || | | Mass storage || 2 X (16 TB HDD x 6 (Raid 10) = 48 TB + 48 TB fail-safe) || | ||

| Line 56: | Line 31: | ||

| Temp storage || 8 TB SSD SATA || | | Temp storage || 8 TB SSD SATA || | ||

|- | |- | ||

| UPS || TRIPPLITE | | UPS || TRIPPLITE SU3000RTXLCD3U, 3000VA 2700W || 3U | ||

|- | |- | ||

| Network || 24-port PPoE 1Gb switch + 8-port 10Gb switch || | | Network || 24-port PPoE 1Gb switch + 8-port 10Gb switch || | ||

| Line 66: | Line 41: | ||

The rack server is a Dell PowerEdge R7525. It has total of 64 cores with 3.0 GHz. | The rack server is a Dell PowerEdge R7525. It has total of 64 cores with 3.0 GHz. | ||

[[:File:Poweredge-r7525-technical-guide-compressed.pdf|Poweredge-r7525-technical-guide]] | |||

=== storage === | === storage === | ||

| Line 74: | Line 51: | ||

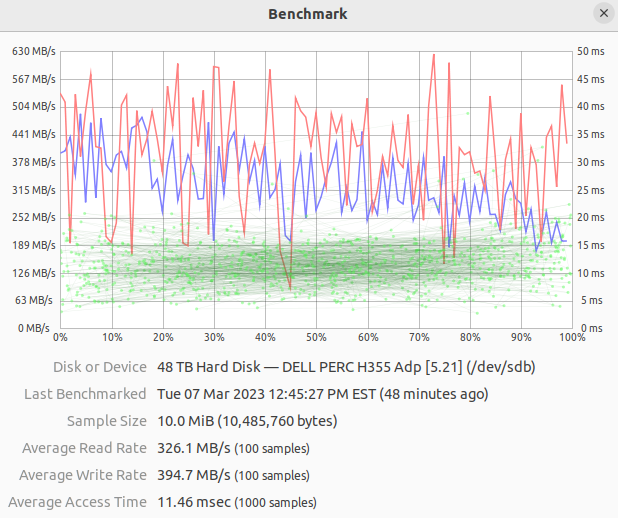

12 x 16TB hard disks were installed in the front panel. They are divided into 2 groups, 6 for raw data storage, 6 for trace analysis. | 12 x 16TB hard disks were installed in the front panel. They are divided into 2 groups, 6 for raw data storage, 6 for trace analysis. | ||

Both groups are RAID10 array. They have read/write speed of 326/394 MB/s. In theory, it can support 3 | Both groups are RAID10 array. They have read/write speed of 326/394 MB/s. In theory, it can support 3 x 1 Gb/s in full speed. | ||

[[File:Disk benchmark for the SOLARIS RAID10 array.png|Disk benchmark for the SOLARIS RAID10 array.png]] | [[File:Disk benchmark for the SOLARIS RAID10 array.png|Disk benchmark for the SOLARIS RAID10 array.png]] | ||

| Line 85: | Line 62: | ||

=== local network === | === local network === | ||

The server has 2 | The server has 2 ports of 10Gb/s Ethernet. One is connected to an 8-port 10Gb/s switch for digitizers. another one is connected to the Mac studio. | ||

There are 2 short PCI slots and 2 normal PCI slots for future extensions, such as 25Gb/s optical fiber ports, or additional 10Gb/s Ethernet ports. | There are 2 short PCI slots and 2 normal PCI slots for future extensions, such as 25Gb/s optical fiber ports, or additional 10Gb/s Ethernet ports. | ||

= | === Data Writing speed === | ||

Although each digitizer has 1Gb/s Ethernet port, the actual data rate would be far lesser. In the present setting, 4 digitizers are connected to the 1Gb/s switch and the maximum data rate is 125 MB/s/digitizer. So the maximum data rate is 500 MB/s. This is already larger than the RAID array write speed (approx. 400 MB/s). Therefore, the 8 TB SSD (approx. 500 MB/s) should be used for temporary storage. | |||

One possibility is to combine the two RAID arrays into one, which will make the write speed approx. 800 MB/s. | |||

Another possibility is to replace one of the raid arrays with SSD, it will give the write speed approx. to 2 GB/s, with a reduction of disk size. | |||

=== Future Upgrade to 100Gb/s data rate === | |||

After CAEN upgraded the firmware to support 10 Gb/s ethernet, the data rate for 10 Digitizer could max to 100Gb/s to 12.5 GB/s, which the current system did not support. | |||

A possible upgrade is using 2 PCIe cards, one for a 100 Gb/s QSPF optical fiber, and another for 2 or 4 NMVe M.2 SSD RAID array card. The write speed for each NMVe M.2 SSD should be at least 7 GB/s. | |||

And a new network switch is needed. The switch should have 10x 10Gb/s port + 1x 100Gb QSPF port for optical fiber. | |||

= Network = | |||

[[File:Network configuration of SOLARIS DAQ.png|thumb]] | |||

The DAQ is the central server for the network. It has two 1G ports and two 10G ports. The left 1G port (from the back view) is used to connect to internet. When n ANL, it connects to onenet with IP 192.168.203.171). The left 10GB port (with domain 192.168.0.XXX) connect to a 10G switch and then connect to digitizes. The other 10G port (with domain 192.168.1.XXX) connects to others devices. | |||

= FSU SOLARIS DAQ = | |||

Please see [[FSU SOLARIS DAQ]] | |||

= | = FSU SOLARIS Analysis Package = | ||

Since the data format of the FSU SOLARIS DAQ is custom designed. An Event builder and the following analysis pipeline are also provided. | |||

// | The code can be found at the FSU git repository : [https://fsunuc.physics.fsu.edu/git/rtang/SOLARIS_Analysis SOLARIS Analysis] | ||

The basic pipeline is following the [ https://github.com/calemhoffman/digios ANL HELIOS] code with much more improvements. ( it may no longer be true as the HELIOS code can/will be updated based on the SOLARIS code development) | |||

== Folder structure == | |||

Unlike the HELIOS code, where the daq and analysis are packed together, the daq and analysis are separated for SOLARIS. But the basic folder structure for the analysis are the same. | |||

Analysis | |||

├── SOLARIS.sh // It is recommended to source the SOLARIS.sh in the user .bashrc. so that the env variable SOLARISANADIR is there | |||

├── SetupNewExp // Switching or creating a new experiment. Its function is the same in the DAQ. | |||

├── cleopatra // Simulation package with the Ptolemy DWBA code. | |||

├── armory // All the weapons are stored to analyze the data. The analysis code in this folder is not experiment specified. | |||

├── data_raw // symbolic link to raw data | |||

├── root_data // symbolic link to root_data | |||

├── WebSimHelper // folder for web simulation | |||

└── working // All experimental specific files. This folder should contains all kind of configurations. | |||

├── ChainMonitors.C // List of root files for data process | |||

├── Mapping.h | |||

├── SimHelper // symbolic link to ../cleopatra/SimHelper.C | |||

└── Monitor.C/H | |||

== Analysis Pipeline == | |||

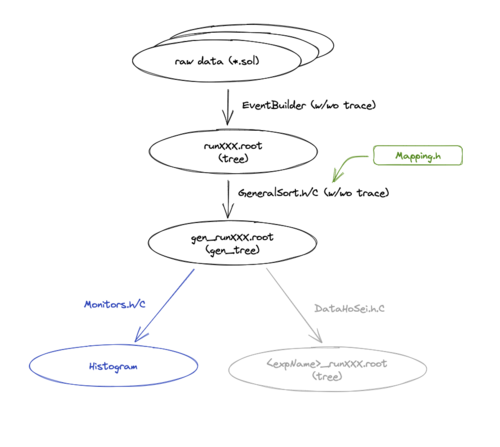

[[File:Analysis PipeLine.png|500px|thumb|right|A flow diagram for the analysis pipeline]] | |||

The pipeline is shown in the left figure. | |||

rawdata -- EventBuilder --> runXXX.root -- GeneralSort --> gen_runXXX.root -- Monitor.C --> histograms | |||

=== Event Builder === | |||

The raw data is already time sorted and each digitizer has its own output files (split into 2 GB). | |||

Using the SolReader Class, the raw data can be read block by block. | |||

The output of the Event Builder is root file (name runXXX.root) that included a TTree and a TMacro for timestamp information. | |||

The trace is stored as a fixed-length array of 2500. | |||

=== GeneralSort === | |||

This applies the following to the runXXX.root | |||

# Mapping.h | |||

# Use TGraph to store the trace | |||

# Support fitting of the trace | |||

# Support multi-thread parallel processing. | |||

=== Monitor.C === | |||

Latest revision as of 16:18, 25 June 2025

The collaboration focuses on the development of the DAQ for the SOLARIS spectrometer.

Kinematics with DWBA Simulation

A web page for the simulation is available at https://fsunuc.physics.fsu.edu/SOLARIS/.

Hardware

| Item | Configuration | Size |
|---|---|---|
| Rack server | Dell PowerEdge R7525 | 2U |
| | 2 × AMD EPYC 7302 (3.0 GHz, 16C/32T each) = 32 cores / 64 threads | |
| | 8 × 16 GB RAM (3200 MHz) = 128 GB | |
| | 12 (front) + 2 (rear) 3.5" HDD slots | |
| | Dual-port 10 Gb/s Ethernet | |
| | 2 × 800 W power supplies | |
| Mass storage | 2 × (6 × 16 TB HDD in RAID 10 = 48 TB usable + 48 TB mirror) | |
| Temp storage | 8 TB SATA SSD | |
| UPS | Tripp Lite SU3000RTXLCD3U, 3000 VA / 2700 W | 3U |
| Network | 24-port 1 Gb/s PoE switch + 8-port 10 Gb/s switch | |
| Mac | Mac Studio (2023 version, M1 Ultra 20-core CPU, 48-core GPU, 32-core Neural Engine, 64 GB RAM, 4 TB SSD) | |

Rack Server

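The rack server is a Dell PowerEdge R7525 with two 16-core AMD EPYC 7302 CPUs at 3.0 GHz. This gives a total of 32 physical cores (64 threads).

Reference: Poweredge-r7525-technical-guide (Poweredge-r7525-technical-guide-compressed.pdf)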

storage

The OS disk is at one of the rear HD slot. It has 1.98 TB capacity and Ubuntu 22.04 was installed.

There is another slot at the rear. but it is using small from factor. We have a 8 TB SSD waiting.

12 x 16TB hard disks were installed in the front panel. They are divided into 2 groups, 6 for raw data storage, 6 for trace analysis. Both groups are RAID10 array. They have read/write speed of 326/394 MB/s. In theory, it can support 3 x 1 Gb/s in full speed.
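The capacity and throughput figures above follow from simple arithmetic. Below is a minimal Python sketch. It assumes decimal units (1 Gb/s = 125 MB/s) and uses the measured 394 MB/s write speed. The helper names are illustrative only and are not part of any SOLARIS tool.

```python
# Minimal sketch: RAID 10 capacity and link-rate arithmetic for the front-panel arrays.
# Assumes decimal units (1 Gb/s = 125 MB/s); helper names are illustrative only.

def raid10_usable_tb(n_disks: int, disk_tb: float) -> float:
    """RAID 10 mirrors every disk, so half of the raw capacity is usable."""
    if n_disks < 4 or n_disks % 2 != 0:
        raise ValueError("RAID 10 needs an even number of disks, at least 4")
    return n_disks * disk_tb / 2


def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a line rate in Gb/s to MB/s (8 bits per byte, decimal prefixes)."""
    return gbps * 1000 / 8


def links_at_full_speed(write_mb_s: float, link_gbps: float = 1.0) -> int:
    """How many links running at full line rate fit within the array write speed."""
    return int(write_mb_s // gbps_to_mb_per_s(link_gbps))


usable = raid10_usable_tb(6, 16)   # one group of six 16 TB disks
links = links_at_full_speed(394)   # measured write speed of one array
print(f"usable per group: {usable} TB; 1 Gb/s links at full speed: {links}")
# -> usable per group: 48.0 TB; 1 Gb/s links at full speed: 3
```

Using the read speed (326 MB/s) instead would allow only two full-speed links. The "three links" figure therefore refers to writing.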

OS, software, and services

The OS is Ubuntu 22.04.

See the internal page Internal:SOLARIS Rack DAQ Setup.

local network

The server has two 10 Gb/s Ethernet ports. One is connected to an 8-port 10 Gb/s switch for the digitizers; the other is connected to the Mac Studio.

Two short and two standard-length PCIe slots are available for future expansion, such as 25 Gb/s optical-fiber ports or additional 10 Gb/s Ethernet ports.

Data Writing speed

Although each digitizer has a 1 Gb/s Ethernet port, the actual data rate is expected to be much lower. In the present setup, 4 digitizers are connected to the 1 Gb/s switch, with a maximum data rate of 125 MB/s per digitizer. The worst-case total is therefore 4 × 125 = 500 MB/s. This already exceeds the write speed of a RAID array (approx. 400 MB/s), so the 8 TB SSD (approx. 500 MB/s) should be used for temporary storage.

One option is to combine the two RAID arrays into one, which would raise the write speed to approx. 800 MB/s.

Another option is to replace one of the RAID arrays with SSDs. This would raise the write speed to approx. 2 GB/s at the cost of reduced capacity.
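The rate budget above can be checked option by option. Below is a minimal Python sketch that compares the worst-case digitizer rate with each storage option. All speeds are the approximate values quoted in the text, not new measurements; the helper names are hypothetical.

```python
# Minimal sketch: worst-case digitizer rate vs. write speed of each storage option.
# All speeds are the approximate values quoted in the text, not new measurements.

PER_DIGITIZER_MB_S = 125   # one 1 Gb/s port at full line rate
N_DIGITIZERS = 4

STORAGE_WRITE_MB_S = {
    "one RAID 10 array": 400,
    "8 TB SATA SSD": 500,
    "two RAID arrays combined": 800,
    "SSDs replacing one RAID array": 2000,
}


def worst_case_rate(n_digitizers: int, per_digitizer_mb_s: float) -> float:
    """Total rate if every digitizer saturates its link simultaneously."""
    return n_digitizers * per_digitizer_mb_s


def headroom(write_mb_s: float, needed_mb_s: float) -> float:
    """Zero or positive: storage keeps up. Negative: data would back up."""
    return write_mb_s - needed_mb_s


needed = worst_case_rate(N_DIGITIZERS, PER_DIGITIZER_MB_S)  # 500 MB/s
for name, speed in STORAGE_WRITE_MB_S.items():
    margin = headroom(speed, needed)
    verdict = "keeps up" if margin >= 0 else "too slow"
    print(f"{name:<30} {speed:>5} MB/s  margin {margin:+5} MB/s  {verdict}")
```

In this worst case, the SATA SSD only just matches the 500 MB/s total and has zero margin.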

Future Upgrade to 100Gb/s data rate

With CAEN firmware supporting 10 Gb/s Ethernet, 10 digitizers could together reach up to 100 Gb/s (12.5 GB/s), which the current system cannot handle.

A possible upgrade uses two PCIe cards: one with a 100 Gb/s QSFP optical-fiber port, and one RAID card holding 2 or 4 NVMe M.2 SSDs. Each NVMe M.2 SSD should have a write speed of at least 7 GB/s.

A new network switch is also needed, with 10 × 10 Gb/s ports plus 1 × 100 Gb/s QSFP port for the optical fiber.
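The numbers behind this upgrade plan can be worked out directly. Below is a minimal Python sketch. It assumes decimal units and that striping NVMe drives (RAID 0) scales write speed linearly, which is optimistic. The function names are hypothetical.

```python
import math

# Minimal sketch of the 100 Gb/s upgrade budget. Assumes decimal units and that
# striping NVMe drives (RAID 0) scales write speed linearly, which is optimistic.

def aggregate_gb_per_s(n_digitizers: int, link_gbps: float) -> float:
    """Combined rate in GB/s when every digitizer saturates its link."""
    return n_digitizers * link_gbps / 8


def uplink_fits(n_digitizers: int, link_gbps: float, uplink_gbps: float) -> bool:
    """True if the switch uplink can carry all digitizer links at full rate."""
    return n_digitizers * link_gbps <= uplink_gbps


def nvme_drives_needed(target_gb_s: float, per_drive_gb_s: float) -> int:
    """Smallest number of striped drives whose combined write speed meets the target."""
    return math.ceil(target_gb_s / per_drive_gb_s)


target = aggregate_gb_per_s(10, 10)  # 12.5 GB/s
print(target, uplink_fits(10, 10, 100), nvme_drives_needed(target, 7.0))
# -> 12.5 True 2
```

Under these assumptions, two 7 GB/s drives already exceed 12.5 GB/s, so a 4-drive card would leave headroom. Note also that 10 × 10 Gb/s exactly fills a single 100 Gb/s uplink. This leaves no margin for protocol overhead.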

Network

The DAQ server is the center of the network. It has two 1G ports and two 10G ports. The left 1G port (seen from the back) connects to the internet; at ANL, it connects to onenet with IP 192.168.203.171. The left 10G port (subnet 192.168.0.XXX) connects to a 10G switch, which in turn connects to the digitizers. The other 10G port (subnet 192.168.1.XXX) connects to other devices.
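The address plan above can be expressed as a lookup from address to network segment. Below is a minimal Python sketch using the standard `ipaddress` module. It assumes that each "192.168.X.XXX" domain is a /24 subnet; the text does not state the netmask. The segment names are illustrative.

```python
import ipaddress

# Minimal sketch: which DAQ network segment an address belongs to.
# Assumption: each "192.168.X.XXX" domain in the text is a /24 subnet.
SEGMENTS = {
    "digitizers": ipaddress.ip_network("192.168.0.0/24"),     # 10G port -> 10G switch
    "other devices": ipaddress.ip_network("192.168.1.0/24"),  # second 10G port
}


def segment_of(address: str) -> str:
    """Return the segment name for an IPv4 address, or 'external' if none matches."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return "external"


print(segment_of("192.168.0.12"))     # a digitizer-side address
print(segment_of("192.168.203.171"))  # the server's onenet address at ANL
```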

FSU SOLARIS DAQ

Please see FSU SOLARIS DAQ

FSU SOLARIS Analysis Package

Since the FSU SOLARIS DAQ uses a custom data format, an event builder and a downstream analysis pipeline are also provided.

The code is in the FSU git repository: SOLARIS Analysis (https://fsunuc.physics.fsu.edu/git/rtang/SOLARIS_Analysis)

The basic pipeline follows the ANL HELIOS code (https://github.com/calemhoffman/digios), with many improvements. (This may no longer be true, as the HELIOS code can be updated based on SOLARIS code development.)

Folder structure

Unlike the HELIOS code, where the DAQ and analysis are packaged together, SOLARIS keeps the DAQ and analysis separate. The basic folder structure of the analysis is the same:

Analysis
- SOLARIS.sh: source it in the user's .bashrc so that the environment variable SOLARISANADIR is set
- SetupNewExp: switches to or creates a new experiment; it works the same way as in the DAQ
- cleopatra: simulation package with the Ptolemy DWBA code
- armory: all the "weapons" for analyzing the data; the code in this folder is not experiment-specific
- data_raw: symbolic link to the raw data
- root_data: symbolic link to the ROOT data
- WebSimHelper: folder for the web simulation
- working: all experiment-specific files, including all configurations
  - ChainMonitors.C: list of ROOT files to process
  - Mapping.h
  - SimHelper: symbolic link to ../cleopatra/SimHelper.C
  - Monitor.C/H
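Before running anything, it can help to check that the layout above is in place. Below is a minimal Python sketch that does this. The script itself is hypothetical; only the folder names and the SOLARISANADIR variable come from the list above.

```python
import os
from pathlib import Path

# Minimal sketch: check that $SOLARISANADIR has the top-level layout listed above.
# The script itself is hypothetical; only the folder names come from the text.
EXPECTED = ["SOLARIS.sh", "SetupNewExp", "cleopatra", "armory",
            "data_raw", "root_data", "WebSimHelper", "working"]


def analysis_root() -> Path:
    """Read the analysis directory from the variable that SOLARIS.sh sets."""
    root = os.environ.get("SOLARISANADIR")
    if not root:
        raise RuntimeError("SOLARISANADIR is not set; source SOLARIS.sh first")
    return Path(root)


def missing_entries(root: Path) -> list[str]:
    """Names from EXPECTED that are absent. Path.exists() follows symlinks,
    so a dangling data_raw or root_data link is reported as missing too."""
    return [name for name in EXPECTED if not (root / name).exists()]


if "SOLARISANADIR" in os.environ:
    print(missing_entries(analysis_root()) or "layout OK")
```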

Analysis Pipeline

The pipeline is shown in the left figure.

rawdata -- EventBuilder --> runXXX.root -- GeneralSort --> gen_runXXX.root -- Monitor.C --> histograms
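The file names above make it easy to tell which stages still need to run for a given run. Below is a minimal Python sketch. It assumes that "XXX" stands for a zero-padded three-digit run number, which the text does not specify. The `pending_stages` helper is hypothetical.

```python
# Minimal sketch: which file-producing stages still need to run for a given run.
# Assumption: "XXX" in the file names is a zero-padded three-digit run number.
PIPELINE = [
    ("EventBuilder", "run{run:03d}.root"),
    ("GeneralSort", "gen_run{run:03d}.root"),
]


def pending_stages(run: int, existing: set[str]) -> list[str]:
    """Stages from the first missing output onward; later outputs depend on it."""
    for i, (_, pattern) in enumerate(PIPELINE):
        if pattern.format(run=run) not in existing:
            return [stage for stage, _ in PIPELINE[i:]]
    return []


print(pending_stages(5, {"run005.root"}))  # -> ['GeneralSort']
```

Monitor.C is left out because it fills histograms from gen_runXXX.root rather than writing a file at this step.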

Event Builder

The raw data are already time-sorted, and each digitizer has its own output files, split into 2 GB pieces.

The SolReader class reads the raw data block by block.

The Event Builder outputs a ROOT file (runXXX.root) containing a TTree and a TMacro with timestamp information.

Each trace is stored as a fixed-length array of 2500 samples.
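The key property used by event building is that every digitizer stream is already time-sorted. The streams can therefore be merged with a k-way merge, then grouped into events. Below is a minimal Python sketch of that idea using in-memory lists. It does not model SolReader or the real file format. The `Hit` fields and the coincidence `window` are hypothetical. Grouping relative to the first hit of each event is only one common choice; the actual Event Builder may group hits differently.

```python
import heapq
from dataclasses import dataclass, field

TRACE_LENGTH = 2500  # fixed trace length quoted in the text


@dataclass(order=True)
class Hit:
    # Only the timestamp takes part in ordering comparisons.
    timestamp: int
    digitizer: int = field(compare=False)
    channel: int = field(compare=False)
    trace: list = field(default_factory=list, compare=False)


def fixed_length(trace, length: int = TRACE_LENGTH) -> list:
    """Zero-pad short traces and truncate long ones to a fixed-length array."""
    return (list(trace) + [0] * length)[:length]


def build_events(streams, window: int) -> list:
    """Merge per-digitizer, already time-sorted hit lists and group hits whose
    timestamps lie within `window` ticks of the first hit of the current event."""
    events, current = [], []
    for hit in heapq.merge(*streams):
        if current and hit.timestamp - current[0].timestamp > window:
            events.append(current)
            current = []
        current.append(hit)
    if current:
        events.append(current)
    return events


dig0 = [Hit(100, 0, 1), Hit(1000, 0, 2)]
dig1 = [Hit(105, 1, 4), Hit(2000, 1, 3)]
print([len(e) for e in build_events([dig0, dig1], window=50)])  # -> [2, 1, 1]
```

`heapq.merge` consumes the streams lazily, which suits block-by-block reading: only the current head of each digitizer stream needs to be in memory.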

GeneralSort

GeneralSort does the following to runXXX.root:

- Applies the mapping in Mapping.h
- Stores each trace in a TGraph
- Supports fitting of the trace
- Supports multi-threaded parallel processing
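Two of these steps, applying a channel mapping and processing entries in parallel, can be illustrated together. Below is a minimal Python sketch of one way to do this. `MAPPING` is a hypothetical stand-in for Mapping.h, and trace fitting is not shown. The real GeneralSort is ROOT/C++ code whose threading model is not described here; this only shows the split of entries into contiguous ranges. In CPython, threads do not speed up CPU-bound Python code because of the GIL.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for Mapping.h: (digitizer, channel) -> detector ID.
MAPPING = {(0, 0): 0, (0, 1): 1, (1, 0): 100}


def detector_id(digitizer: int, channel: int) -> int:
    return MAPPING.get((digitizer, channel), -1)  # -1 marks an unmapped channel


def split_entries(n_entries: int, n_workers: int) -> list:
    """Split [0, n_entries) into contiguous ranges whose sizes differ by at most 1."""
    base, extra = divmod(n_entries, n_workers)
    ranges, start = [], 0
    for i in range(n_workers):
        stop = start + base + (1 if i < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def sort_range(hits, start: int, stop: int) -> list:
    """Map (digitizer, channel, energy) rows to (detector ID, energy)."""
    return [(detector_id(d, c), e) for d, c, e in hits[start:stop]]


def general_sort(hits, n_workers: int = 4) -> list:
    ranges = split_entries(len(hits), n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        parts = list(pool.map(lambda r: sort_range(hits, *r), ranges))
    # pool.map preserves input order, so concatenation restores entry order.
    return [row for part in parts for row in part]


print(general_sort([(0, 0, 5), (0, 1, 6), (1, 0, 7), (2, 2, 8)], n_workers=2))
# -> [(0, 5), (1, 6), (100, 7), (-1, 8)]
```

Contiguous ranges keep each worker's reads sequential and make it simple to put the output back in the original entry order.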